Integration with Langfuse

Langfuse is an open-source observability and analytics platform designed for LLM applications. It helps teams monitor, evaluate, and improve their LLM implementations through comprehensive tracing and evaluation tools.

Key Features

Observability

- Detailed Tracing: Capture and analyze every LLM interaction, including:
  - Prompts and completions
  - Token usage and costs
  - Latency metrics
  - Metadata and custom properties

- Structured Logging: Track complex chains of LLM calls with nested traces

- Real-time Monitoring: View live performance metrics and usage patterns

Evaluation & Scoring

- Quality Metrics: Track and measure LLM output quality through:
  - Model-based evaluations
  - User feedback collection
  - Custom scoring criteria

- Score Analysis: Analyze quality scores across different:
  - Models
  - Prompt versions
  - Use cases

- Performance Tracking: Monitor success rates and identify areas for improvement

Using Langfuse with Inferable

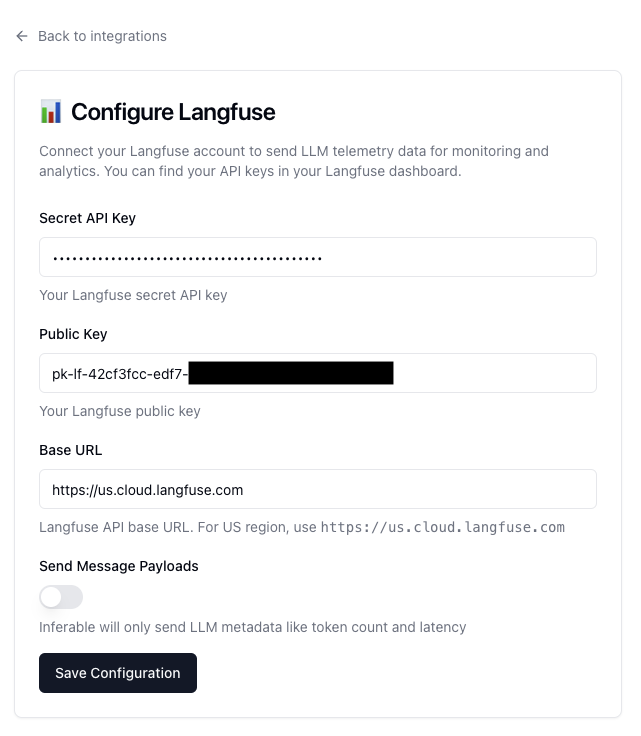

Inferable provides native integration with Langfuse, allowing you to get complete telemetry for your Runs. To enable the Langfuse integration:

- Get your Langfuse API keys from the Langfuse dashboard.

- Configure Inferable with your Langfuse credentials in the Integrations tab of your preferred cluster.

Configuration

- Secret API Key: Your Langfuse Secret API Key.

- Public API Key: Your Langfuse Public API Key.

- Base URL: Your Langfuse Base URL. Depending on your deployment and data region, this will be something like `https://<region>.cloud.langfuse.com`.

- Send Message Payloads: Whether to send the inputs and outputs of LLM calls and function calls to Langfuse.
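Before pasting credentials into the Integrations tab, it can help to check that the key pair and Base URL work together. Langfuse's public REST API authenticates with HTTP Basic auth, using the public key as the username and the secret key as the password. The following is a minimal sketch under that assumption: `LangfuseConfig`, `auth_header`, and `build_check_request` are hypothetical names (not part of Inferable or the Langfuse SDK), and the `/api/public/traces?limit=1` endpoint is an assumption based on the Langfuse public API, so verify it against your Langfuse version. The sketch only builds the request; it never sends it.

```python
import base64
from dataclasses import dataclass
from urllib.parse import urlparse
from urllib.request import Request


@dataclass
class LangfuseConfig:
    """Hypothetical container mirroring the fields in the Integrations tab."""
    secret_key: str
    public_key: str
    base_url: str
    send_message_payloads: bool = False  # not used below; shown for completeness


def auth_header(cfg: LangfuseConfig) -> str:
    # HTTP Basic auth: public key as username, secret key as password.
    raw = f"{cfg.public_key}:{cfg.secret_key}".encode()
    return "Basic " + base64.b64encode(raw).decode()


def build_check_request(cfg: LangfuseConfig) -> Request:
    """Build (but do not send) a lightweight authenticated request."""
    parsed = urlparse(cfg.base_url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"Base URL must be an absolute http(s) URL, got {cfg.base_url!r}")
    # Assumed endpoint: list at most one trace, only to confirm the keys are accepted.
    url = cfg.base_url.rstrip("/") + "/api/public/traces?limit=1"
    return Request(url, headers={"Authorization": auth_header(cfg)}, method="GET")
```

Passing the result to `urllib.request.urlopen` would perform the call. A successful response suggests the keys and Base URL match; an authentication error usually points to a wrong key pair or a Base URL for a different region.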

Message Payloads

By default, Inferable only sends metadata about LLM calls and function calls, such as the model, Run ID, token usage, and latency. If you enable Send Message Payloads, Inferable also sends the inputs and outputs of those calls, including the prompt, response, tool calls, tool call arguments, and tool call results.

Features

Tracing

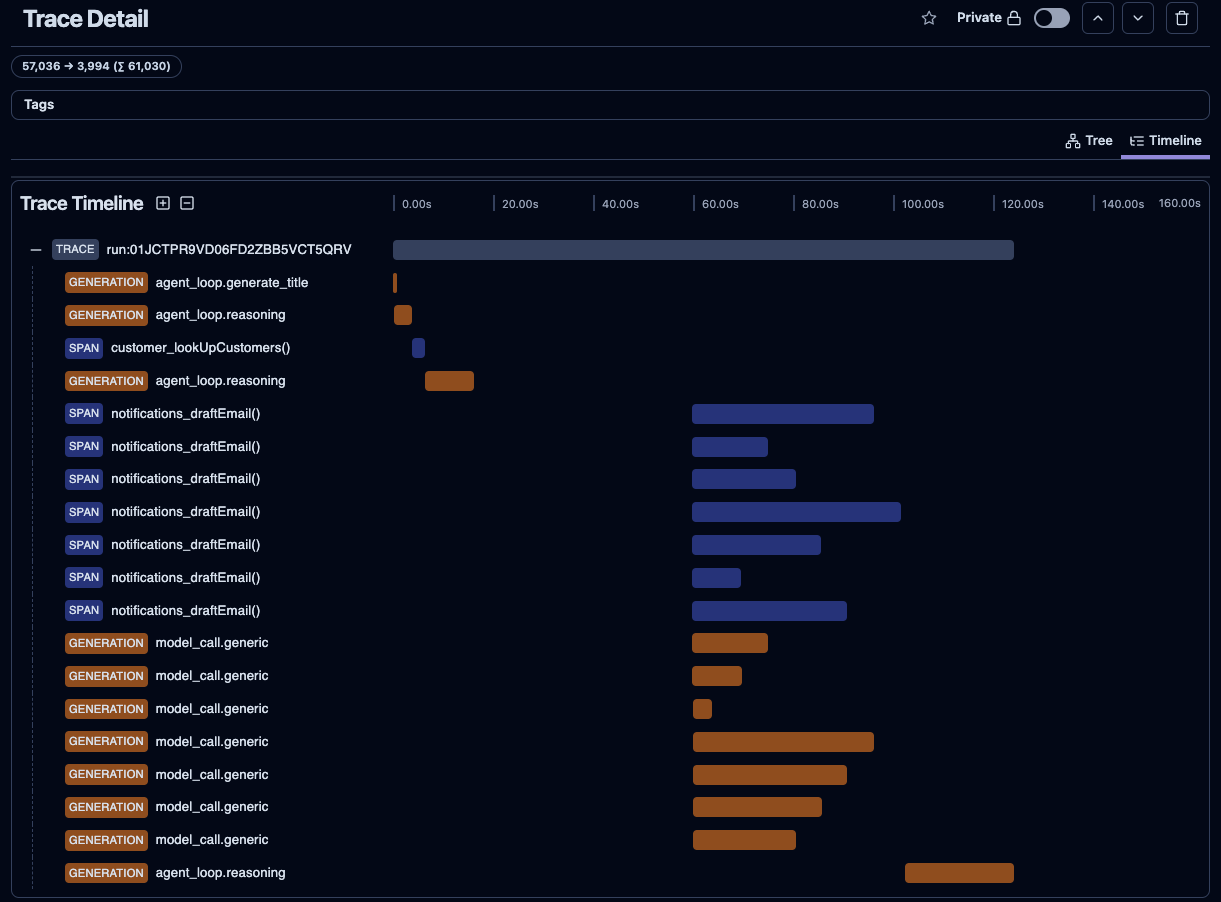

Once you have enabled Langfuse integration, you will start to see traces in the Langfuse dashboard. Every Run in Inferable will be mapped to its own trace in Langfuse. You will find two types of spans in the trace:

- Tool Calls: Denoted by the function name. A span is created for each tool call the LLM makes during the Run.

- LLM Calls: Denoted by GENERATION. Inferable creates a separate span for each LLM call in the Run, including:
  - Agent loop reasoning
  - Utility calls (e.g. summarization, title generation)
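The mapping described above can be pictured as a small data model: one trace per Run, a span named after the function for each tool call, and a GENERATION for every LLM call, including utility calls. The sketch below is purely illustrative: `Observation`, `Trace`, `trace_for_run`, and the `(kind, label)` step format are hypothetical, the `"SPAN"` type for tool calls is an assumption, and nesting is flattened into a list. It is not how Inferable builds traces internally.

```python
from dataclasses import dataclass, field


@dataclass
class Observation:
    name: str
    type: str  # "GENERATION" for LLM calls; "SPAN" is assumed for tool calls


@dataclass
class Trace:
    run_id: str  # one trace per Inferable Run
    observations: list[Observation] = field(default_factory=list)


def trace_for_run(run_id: str, steps: list[tuple[str, str]]) -> Trace:
    """Build a flat, illustrative trace from hypothetical (kind, label) steps.

    kind is "tool" or "llm". For tool calls, label is the function name;
    for LLM calls, it describes the purpose (e.g. "agent", "summarization").
    """
    trace = Trace(run_id=run_id)
    for kind, label in steps:
        if kind == "tool":
            # Tool-call spans are identified by the function the LLM called.
            trace.observations.append(Observation(name=label, type="SPAN"))
        elif kind == "llm":
            # Every LLM call, including utility calls, gets its own GENERATION.
            trace.observations.append(Observation(name=label, type="GENERATION"))
        else:
            raise ValueError(f"unknown step kind: {kind!r}")
    return trace
```

For example, `trace_for_run("run_1", [("llm", "agent"), ("tool", "search"), ("llm", "summarization")])` yields three observations: two GENERATIONs and one SPAN named `search`.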

Evaluation

Whenever you submit an evaluation on a Run via the Playground or the API, Inferable sends a score to Langfuse on that Run's trace. If you use Langfuse for evaluation, this lets you correlate the evaluation with the specific trace in Langfuse.
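If you also post your own scores to Langfuse (for example, from a separate evaluation job), they can sit alongside Inferable's scores on the same trace. A minimal sketch, assuming Langfuse's public `POST /api/public/scores` endpoint with `traceId`, `name`, and `value` fields (plus an optional `comment`) and the Basic auth scheme used by the rest of the Langfuse public API. `build_score_request` is a hypothetical helper, and the sketch assumes you already know the Langfuse trace ID for the Run, since this page does not say how trace IDs are derived from Run IDs. It builds the request without sending it.

```python
import base64
import json
from typing import Optional
from urllib.request import Request


def build_score_request(
    base_url: str,
    public_key: str,
    secret_key: str,
    trace_id: str,
    name: str,
    value: float,
    comment: Optional[str] = None,
) -> Request:
    """Build (but do not send) a request that attaches a score to a trace."""
    body: dict = {"traceId": trace_id, "name": name, "value": value}
    if comment is not None:
        body["comment"] = comment
    # Basic auth: public key as username, secret key as password.
    token = base64.b64encode(f"{public_key}:{secret_key}".encode()).decode()
    return Request(
        base_url.rstrip("/") + "/api/public/scores",
        data=json.dumps(body).encode(),
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

Using a distinct score `name` for your own evaluations keeps them easy to tell apart from the scores Inferable submits when you compare them in Langfuse.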